Introduction

“Apprendre à lire, c’est allumer du feu; toute syllabe épelée étincelle.” [To learn to read is to light a fire; every syllable spelled out sparkles.]

— Victor Hugo

Reading and writing are humankind’s principal tools for transmitting and retrieving information across space and time. The fact that you are reading this thesis, which was written at a certain point in time with a very precise aim, demonstrates the power conveyed by only 26 visual symbols (i.e., letters) arranged in a specific order. However, compared to spoken language, the system that enabled the development of reading and writing (i.e., orthography) is a relatively recent invention in human history. As a result, its role has long been neglected in the scientific study of language. Indeed, despite its indisputable importance in collecting, storing and communicating knowledge, some of the most prominent linguists of the 20th century did not even consider orthography to be part of language (Rutkowska, 2017). According to Bloomfield, for instance, orthography does not belong to linguistics as an object of research and should therefore be excluded from its scientific inquiry. Considered one of the leading voices responsible for the disregard of orthography in the scientific study of language, Bloomfield viewed written language merely as a tool for representing speech:

“Writing is not language, but merely a way of recording language by means of visible marks” (Bloomfield 1933: 21).

Similar views, in line with this relativistic school of thought postulating the complete dependence of written on spoken language, were shared by Saussure, who considered orthography a secondary system entirely dependent on speech:

“Langue et écriture sont deux systèmes de signes distincts ; l’unique raison d’être du second est de représenter le premier” [Language and writing are two distinct systems of signs; the second exists for the sole purpose of representing the first] (Saussure 1989: 45).

as well as by Sapir, who viewed written language as a circulating medium used to express speech:

“The written forms are secondary symbols of the spoken ones — symbols of symbols — yet so close is the correspondence that they may, not only in theory but in the actual practice of certain eye-readers and, possibly, in certain types of thinking, be entirely substituted for the spoken ones.” (Sapir 1921: 20).

This view was later abandoned in favour of a more autonomistic status of orthography, according to which writing does more than just represent speech (see Liuzza, 1996). Nevertheless, the primacy of speech over spelling¹ is also evident in the early stages of psycholinguistic research on written language processing (Rastle, 2019). This primacy, and in particular the assumed complete dependence of written on spoken language, is best reflected in the fact that (silent) reading was initially seen as speech happening inside one’s head (i.e., inner speech; see Huey, 1908). Moreover, even in the modern study of reading, the notion of “mental pronunciation”, or phonological decoding, plays a central role in understanding how letters are converted into sounds (Rozin & Gleitman, 1977). With this in mind, and considering that speech (spoken language) precedes reading and writing (written language) not only phylogenetically (in the course of human evolution) but also ontogenetically (in the course of individual development), it is not surprising that the psycholinguistic literature has mainly studied the effects of spoken on written language. We therefore begin this introduction by presenting some of the key findings showing how our experience with spoken language affects written language processing (i.e., effects of phonology on orthographic processing). Note, however, that the present thesis focuses on the reverse effects (i.e., effects of orthography on phonological processing).

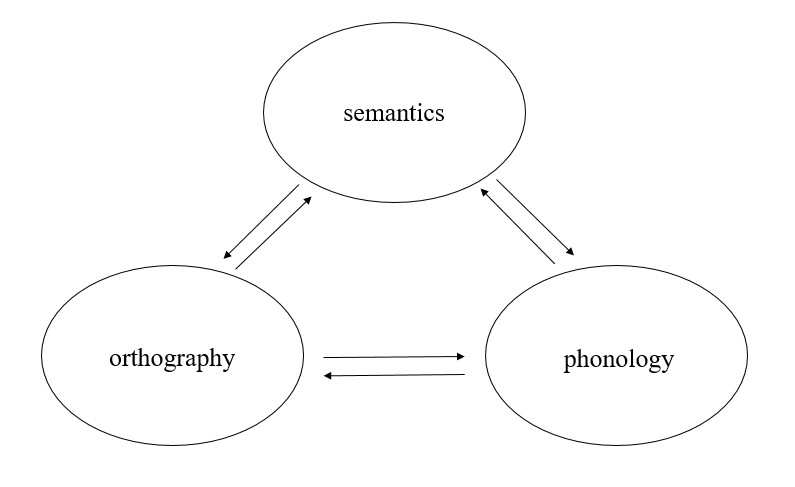

The impact of phonology on written language processing

Examples of how spoken language (i.e., phonology) affects written language processing (i.e., visual word recognition or silent reading) can be found in everyday reading. For example, skilled readers tend to overlook spelling errors that preserve the pronunciation of the target word (i.e., pseudowords that are phonologically identical to a real word, such as crain for crane) and accept these pseudohomophones as real words during reading. Similarly, they may need more time to read and comprehend sentences containing sound repetitions (i.e., tongue-twisters), even when reading “in their heads” (i.e., during silent reading). This anecdotal evidence is supported by a large body of experimental findings showing that phonology is activated during written language processing (Berent & Perfetti, 1995; Lukatela & Turvey, 2000; Pexman, Lupker, & Reggin, 2002; for a review, see Brysbaert, 2022). Much like the anecdotal example described above, these studies used homophones (words that sound the same, i.e., share phonology, but differ in meaning and spelling, such as <rows> and <rose>) to probe the activation of phonology during written language processing. For example, Van Orden (1987) showed that skilled readers have difficulty rejecting homophones in a semantic categorization task: participants more often incorrectly classified MEET (a homophone of MEAT) as a type of food than MELT. As this homophony effect was present regardless of the orthographic similarity between the correct word and its homophone, the author argued that it was driven by the automatic activation of phonological information during early visual word recognition.

Early and automatic activation of phonology in visual word recognition is further supported by findings showing faster recognition of words preceded by visual primes with the same pronunciation and a similar spelling (e.g., <mayd> before <MADE>) than by primes with a similar spelling only (e.g., <mard> before <MADE>; Perfetti, Bell, & Delaney, 1988). These homophone effects, replicated across a variety of tasks and paradigms such as letter detection (Drewnowski & Healy, 1982) and silent proofreading (Daneman & Stainton, 1991), were explained in light of interactive models of reading and visual word recognition. According to these models, which all assume bidirectional links between orthographic and phonological codes (see Figure 0.1), different representational levels interact during visual word recognition (e.g., Coltheart, Rastle, Perry, Langdon, & Ziegler, 2001; Plaut, McClelland, Seidenberg, & Patterson, 1996). Due to this interactive flow of activation, phonological representations are automatically activated by their orthographic counterparts during written word processing, regardless of their relevance for the task at hand.

Figure 0.1: The Triangle Model of Word Reading. The triangle model of word reading, adapted from Plaut, McClelland, Seidenberg, and Patterson (1996), which assumes bidirectional links between phonological, orthographic and semantic levels of representation.

Further evidence for the automatic activation of phonology during written language processing comes from research with bilinguals and second language learners. For instance, Friesen and Jared (2012) showed that bilinguals need more time to process written words that share their pronunciation, but not their spelling or meaning, across their two languages (i.e., interlingual homophones, such as English shoe and French chou, ‘cabbage’, which are pronounced alike) than words specific to one of their languages. Cross-linguistic homophone effects were also found in Dutch-French bilinguals, who showed similar priming effects when prime and target belonged to different languages (i.e., interlingual priming effects) as when they belonged to the same language (Brysbaert, Van Dyck, & Van de Poel, 1999). Importantly, these results hold regardless of second language proficiency (Duyck, Diependaele, Drieghe, & Brysbaert, 2004).

Finally, research on readers with reading disorders, such as developmental dyslexia, highlights the importance of phonology and phonological skills in written language processing (Saksida et al., 2016; for a review, see Snowling & Hulme, 2021). Given that readers with dyslexia perform poorly in tasks requiring phonological processing (e.g., phonological awareness tasks; Snowling, 1995), and that many reading researchers view phonological processing difficulties as the core deficit of this disorder (for a review, see Snowling & Hulme, 2021), phonology is considered indispensable for successful written language processing.

In summary, previous research shows pervasive effects of phonology on orthographic processing. It seems that our experience with spoken language cannot be switched off, even when it is not required by the task, as is the case in silent reading. Prominent models of reading (Coltheart et al., 2001) and visual word recognition (Plaut et al., 1996) all assume bidirectional links (and hence a bidirectional flow of activation) between phonological and orthographic representations. In addition, written communication is nowadays just as important as speech. It was therefore only a matter of time before the reverse effects, namely the effects of orthography on spoken language processing, began to be explored. The present thesis belongs to this line of research, as it explores indirect effects of orthography on spoken word processing and, in particular, on spoken word learning.

The impact of orthography on spoken language processing

The idea that orthography is involved in spoken language processing, and in particular in phonological processing, is closely tied to the notion of phonological awareness and the development of metalinguistic skills. Morais, Alegria and Bertelson (1979) were among the first to reveal a link between phonological awareness and reading ability. They compared the performance of age-matched literate and illiterate adults in tasks involving the addition and deletion of sounds in spoken words and nonwords. Literate participants completed both tasks easily (accuracy was close to ceiling for real words and relatively high for nonwords), whereas the accuracy of illiterate participants was rather low. Following up on these findings, numerous studies have reported a positive link between reading skills and performance on phonological awareness tasks (Saksida et al., 2016; for a review, see Snowling & Hulme, 2021). Consequently, phonological awareness, defined as a person’s ability to perceive, think about, and manipulate individual speech sounds (phonemes) within spoken words (Snowling & Hulme, 1994), has been considered dependent on the acquisition of reading (Morais & Kolinsky, 2017; but see, for example, Lundberg, Frost, & Peterson, 1991). Evidence that phonological awareness indeed depends on orthographic knowledge comes from tasks in which relying on orthography actually produces a processing disadvantage (Castles, Holmes, Neath, & Kinoshita, 2003; for similar results, see Tyler & Burnham, 2006). Castles et al. (2003) manipulated the transparency of the to-be-deleted sounds and observed worse deletion performance for words containing sounds with opaque sound-to-letter correspondences (e.g., removing /n/ from <knuckle>) than for words with transparent correspondences (e.g., removing /b/ from <buckle>).

The debate on whether there is a causal relationship between phonological awareness and reading success is still ongoing. Nevertheless, there seems to be agreement that the two skills develop in interaction and that their relationship is reciprocal (for a review, see Castles & Coltheart, 2004). In the remainder of this section, we present evidence showing how learning to read affects speech processing. We first review studies reporting reading-related differences in the processing of individual speech sounds. Since the focus of this thesis is on spoken words, we then turn to work reporting orthographic effects in spoken word processing.

The impact of orthography on speech sound processing

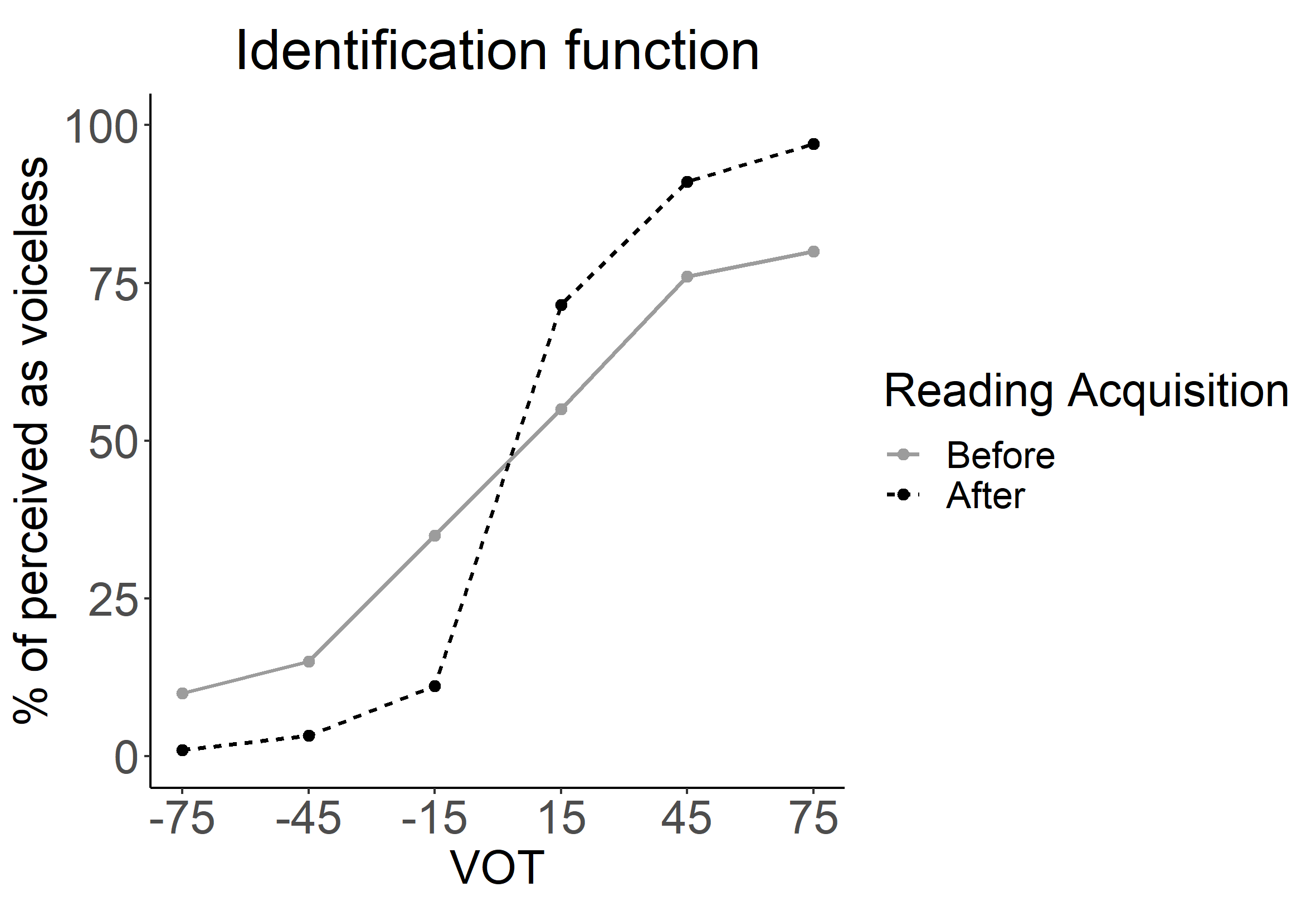

More evidence supporting the idea that reading acquisition enhances the perception of speech in terms of speech sounds comes from studies showing improvements in speech perception that coincide with the formal onset of reading instruction. For instance, Hoonhorst and colleagues (2011; see also Hoonhorst et al., 2009) compared categorical perception (i.e., phoneme discrimination) in French-speaking adults and children aged 6 to 8 years. Relative to first-grade and pre-reading kindergarten children, older children, who were also more advanced readers, were better at discriminating two sounds from the same phonetic continuum (i.e., /d/ and /t/). This improvement was reflected in a steeper identification slope (see Figure 0.2). Kolinsky and colleagues (2021) recently demonstrated that this increase in boundary precision (BP) is indeed due to the acquisition of literacy rather than to maturation and the related physical changes. To disentangle the effects of maturation from those induced by reading acquisition, the authors tested beginning child readers, beginning adult readers, and skilled adult readers on the same categorical perception task used by Hoonhorst and colleagues. Significant differences in BP between the two adult groups, but not between the child and adult beginning readers, were interpreted as stemming from the acquisition of literacy (see Burnham, 2003, for more details on the reading hypothesis).

Figure 0.2: Example of an identification function on a voice onset time (VOT) continuum. Visual representation of how boundary precision changes after reading acquisition, based on simulated data. The improvement in boundary precision, and consequently in categorical perception, is manifested as an increase in the steepness of the identification function (dark dotted line).
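To illustrate what an increase in steepness means, the sketch below evaluates a two-parameter logistic identification function, a common way of modelling identification data along a VOT continuum, for two hypothetical listeners who share the same category boundary but differ in slope. The boundary (0 ms), the slope values, and the function names are assumptions chosen for illustration; they are not the parameters behind Figure 0.2 or estimates from the studies cited above.

```python
import math


def p_t_response(vot_ms: float, boundary_ms: float, slope: float) -> float:
    """Logistic identification function: probability of labelling a token as /t/."""
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))


def steepness_at_boundary(slope: float) -> float:
    """Derivative of the logistic curve at its 50% point: slope / 4 (per ms of VOT)."""
    return slope / 4.0


# Hypothetical values for illustration only; not fitted to any data set.
BOUNDARY_MS = 0.0
LISTENER_SLOPES = {"shallower": 0.05, "steeper": 0.20}

if __name__ == "__main__":
    continuum = range(-60, 61, 20)  # VOT steps in ms: -60, -40, ..., 60
    for label, k in LISTENER_SLOPES.items():
        probs = [round(p_t_response(v, BOUNDARY_MS, k), 2) for v in continuum]
        print(f"{label:9s} steepness={steepness_at_boundary(k):.4f} P(/t/)={probs}")
```

Both curves cross 50% at the same VOT; only their slopes differ. For a logistic function, the slope at the crossover point is a quarter of the slope parameter, so a larger parameter translates directly into a sharper category boundary, which is what the steeper curve in Figure 0.2 depicts.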

Finally, in a recent study of ours, we also observed differences in speech sound processing related to reading acquisition (Jevtović, Stoehr, Klimovich-Gray, Antzaka, & Martin, 2022). We tested 60 second-grade Spanish-speaking children on the perception and production of three speech sounds. Crucially, these sounds differed in the number of graphemes they map onto in Spanish. The consistent sounds /p/ and /t/ each have only one orthographic representation in Spanish (<p> and <t>, respectively), while the inconsistent sound /k/ is associated with three different graphemes (<c>, <qu> and <k>). Both perception and production of the consistent sounds were modulated by children’s reading skills: better readers, who had also developed stronger links between sounds and graphemes, were faster to perceive and produce consistent, but not inconsistent, sounds.

These studies reveal changes in speech sound processing related to reading acquisition. In the next section, we present changes in spoken word processing induced by reading acquisition and, specifically, by the acquisition of sound-to-spelling correspondences.

The impact of orthography on spoken word processing

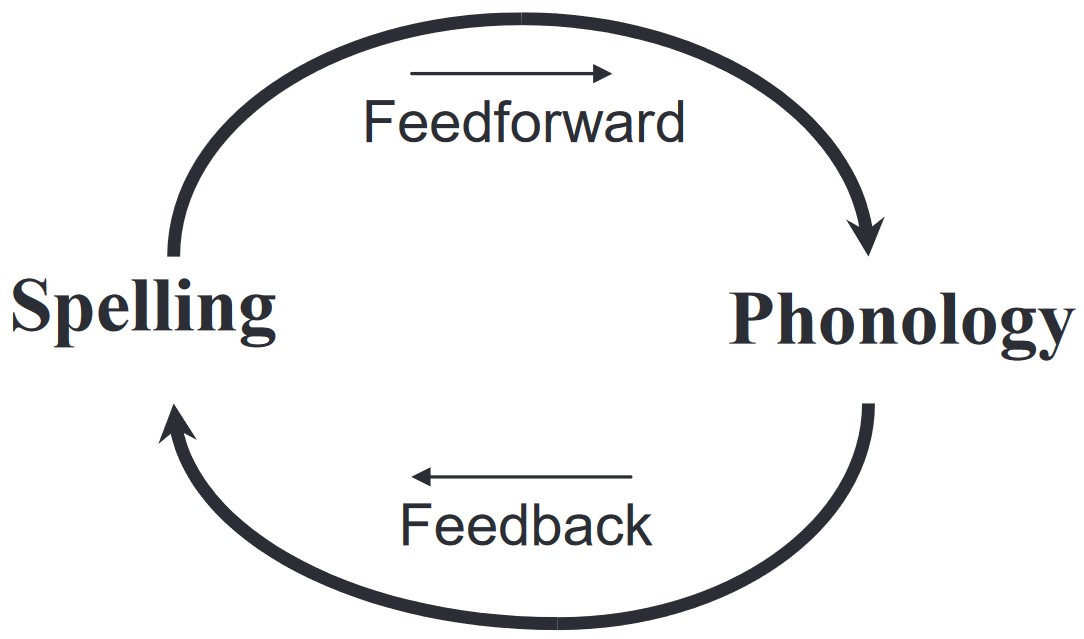

Another line of research showing the impact of orthography on speech processing builds on studies investigating how phonological and orthographic codes interact during visual word processing (see above). This research demonstrated that not only spelling-to-sound inconsistencies (i.e., orthographic representations with more than one possible pronunciation, hereafter feedforward inconsistencies) but also phonology-to-spelling inconsistencies (i.e., phonological representations with more than one possible spelling, hereafter feedback inconsistencies; see Figure 0.3)² hinder visual word recognition and reading (for a review, see Ziegler et al., 2008). Based on this finding, and in light of interactive models proposing bidirectional links between orthographic and phonological levels of representation, researchers asked whether similar effects of feedback inconsistency could also be observed in the auditory domain. To explore the potential effects of orthography on spoken language processing, these studies exploited the fact that some words have consistent spellings (e.g., rhymes that can only be spelled in one way, such as /ʌk/ in luck), while others are inconsistent (i.e., their rhymes have two or more possible spellings, such as /iːp/, spelled <eap> in leap and <eep> in jeep).

Figure 0.3: Bidirectional Flow of Information Between Sound and Spelling. Schematic representation of the mutual influence of sound and spelling in language processing adapted from Van Orden and Goldinger, 1994.
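To make feedback (sound-to-spelling) consistency concrete, the sketch below tabulates, for each rhyme pronunciation in a small hypothetical lexicon, the proportion of word types that use each spelling; a rhyme with a single spelling counts as feedback-consistent. The lexicon entries, the IPA transcriptions, and the function names are illustrative assumptions: published consistency measures are computed over large corpora and may be based on type counts or on frequency-weighted token counts.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical toy lexicon: (word, rhyme in IPA, spelling of the rhyme). Illustrative only.
TOY_LEXICON: List[Tuple[str, str, str]] = [
    ("luck", "ʌk", "uck"),
    ("duck", "ʌk", "uck"),
    ("globe", "əʊb", "obe"),
    ("robe", "əʊb", "obe"),
    ("name", "eɪm", "ame"),
    ("game", "eɪm", "ame"),
    ("aim", "eɪm", "aim"),
    ("leap", "iːp", "eap"),
    ("jeep", "iːp", "eep"),
    ("deep", "iːp", "eep"),
]


def feedback_consistency(lexicon: List[Tuple[str, str, str]]) -> Dict[str, Dict[str, float]]:
    """For each rhyme pronunciation, return {spelling: proportion of word types using it}."""
    counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for _word, rhyme, spelling in lexicon:
        counts[rhyme][spelling] += 1
    table: Dict[str, Dict[str, float]] = {}
    for rhyme, spellings in counts.items():
        total = sum(spellings.values())
        table[rhyme] = {s: n / total for s, n in spellings.items()}
    return table


def is_feedback_consistent(rhyme: str, table: Dict[str, Dict[str, float]]) -> bool:
    """A rhyme is feedback-consistent if it maps onto exactly one spelling."""
    return len(table[rhyme]) == 1


if __name__ == "__main__":
    table = feedback_consistency(TOY_LEXICON)
    for rhyme, spellings in table.items():
        status = "consistent" if is_feedback_consistent(rhyme, table) else "inconsistent"
        print(f"/{rhyme}/ -> {spellings} ({status})")
```

In this toy lexicon, /ʌk/ and /əʊb/ come out as consistent, whereas /eɪm/ (<ame> or <aim>) and /iːp/ (<eap> or <eep>) come out as inconsistent. Graded proportions rather than a simple yes/no are kept because consistency is often treated as a continuous variable.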

Around the same time that Morais and colleagues (1979) observed a link between phonological processing and reading skills, Seidenberg and Tanenhaus (1979) demonstrated that sound-to-spelling consistency affects spoken word processing. Interested in the possible interaction between orthographic and phonological codes during spoken language processing, the authors manipulated the spelling and pronunciation of the word pairs presented in a rhyme judgement task: some pairs overlapped in both pronunciation and spelling (e.g., pie - tie), while others shared the pronunciation but differed in spelling (e.g., pie - eye). Faster decision times for rhyming pairs with matching spellings were interpreted as evidence of orthographic involvement in spoken language processing (see also Donnenwerth-Nolan, Tanenhaus, & Seidenberg, 1981, for similar results using better matched stimuli). The same effects of orthographic consistency were later observed in phoneme monitoring (Dijkstra, Roelofs, & Fieuws, 1995), phoneme counting (Treiman & Cassar, 1997), and shadowing tasks (Ventura et al., 2004). Importantly, observing the same spelling effects in paradigms in which orthography is a priori irrelevant for the task demonstrates that these effects are not due to participants deliberately resorting to their orthographic knowledge. One such example is an auditory lexical decision study in which Ziegler and Ferrand (1998) observed significantly longer response times for words whose rhymes have two or more possible spellings (e.g., /eɪm/ in name can be written as either <ame> or <aim>) than for words whose rhymes can be spelled in only one way (e.g., /əʊb/ in globe can only be written as <obe> in English).

The robustness and generalizability of this orthographic consistency effect (OCE) are supported by studies showing that, beyond languages with highly inconsistent phonology-to-spelling mappings such as English and French (Ziegler & Ferrand, 1998; Ziegler et al., 2008), sound-to-spelling inconsistency also affects speech perception in more consistent languages such as Portuguese (Ventura et al., 2004) and Spanish (Jevtović et al., 2022). In addition, studies tracing the developmental trajectory of the OCE in typically developing readers as well as in readers with dyslexia indicate that the effect is indeed driven by orthographic knowledge. The OCE appears to be absent in pre-reading children (Ziegler & Muneaux, 2007) and in children with dyslexia (Miller & Swick, 2003), who either have not yet acquired orthographic knowledge or have failed to acquire it successfully. Finally, evidence for the OCE has also been found in several speech production studies (Damian & Bowers, 2003; Rastle, McCormick, Bayliss, & Davis, 2011), although the effect appears less robust in production (Alario, Perre, Castel, & Ziegler, 2007).

In summary, the effects described above show that, once acquired, orthographic knowledge affects spoken language processing. Nevertheless, despite the large body of evidence for the OCE, its origin and locus are still debated. According to one account, the OCE is driven by the automatic co-activation of orthographic representations during spoken language processing (Chéreau et al., 2007; Pattamadilok, Perre, Dufau, & Ziegler, 2009). On this view, hearing a spoken word automatically activates its orthographic representation, leading either to facilitation (for words whose rhyme has a unique spelling, such as globe) or to slower recognition (for words whose rhyme has more than one possible spelling, such as name). By contrast, the phonological restructuring account suggests that literacy acquisition, and in particular the acquisition of sound-to-spelling correspondences, changes the nature of phonological representations (Muneaux & Ziegler, 2004; Perre, Pattamadilok, Montant, & Ziegler, 2009). Phonological representations of words with only one possible spelling become more fine-grained and salient once reading is acquired, leading to their faster recognition relative to spoken words with several possible spellings. Importantly, the two accounts are not mutually exclusive, as both automatic co-activation and phonological restructuring could contribute to the OCE.

The present thesis aims to add to the previous literature by exploring the role of orthography in spoken word learning. Spoken words’ spellings have been shown to impact their auditory perception even when orthographic information is not relevant for the task. We thus set out to test whether orthographic knowledge can exert its effects during the acquisition of novel auditory word forms. Before presenting the objectives of the thesis, we will first discuss some previous literature investigating the link between orthography and novel word learning.

The role of orthography in word learning

Although the rate of learning novel words is not constant across the lifespan, being highest in the first years of life and during the school years (Anglin, Miller, & Wakefield, 1993), a vast number of novel words continue to be acquired throughout adulthood (Brysbaert, Stevens, Mandera, & Keuleers, 2016; Nation & Waring, 1997). Despite its importance and ubiquity, incorporating a novel word into the mental lexicon is not a simple process, as it depends on the successful completion of several distinct steps. In particular, learning a novel word involves acquiring a new phonological form (i.e., knowledge of how the word sounds), acquiring the concept the word refers to (i.e., its semantics), and learning the association between the two. In addition, the newly acquired word must be integrated into the existing network of familiar words and the connections between them (McMurray, Kapnoula, & Gaskell, 2016).

A large body of psycholinguistic research has examined the factors that affect this learning process. For example, distributed learning (i.e., learning spread out over time) has been shown to lead to better retention of novel word forms than learning concentrated in a single session (for a review, see Gerbier & Toppino, 2015). Similarly, experimental evidence shows that even though successful learning can occur incidentally (i.e., without the intention to learn), explicit instruction yields better learning outcomes (Batterink & Neville, 2011; Konopak et al., 1987; Sobczak & Gaskell, 2019). Finally, the importance of sleep for novel word acquisition and consolidation has repeatedly been demonstrated (for a review, see Schimke, Angwin, Cheng, & Copland, 2021). Of particular importance for the present thesis, however, are studies that have examined the role of orthography in novel vocabulary acquisition.

The idea that orthography may be involved in novel word acquisition, and can actually facilitate the learning process, stems from Ehri’s word identity amalgamation theory (Ehri, 1978). According to this theory, orthographic labels (i.e., words’ spellings) can serve as visual representations of phonological input, thus providing a way to symbolize abstract phonological representations when storing them in memory. Across four experiments, Ehri and Wilce (1979) provided some of the first evidence supporting the ideas derived from the amalgamation theory. The authors reported a learning advantage for novel words acquired together with their orthographic representations, relative to those learned through aural instruction only, in both first- and second-grade English-speaking children. In addition, they described the conditions under which this advantage is most likely to occur. First, they showed that the spellings presented along with novel phonological forms have to represent the phonological input correctly, as misspellings can actually hinder learning. Second, and not surprisingly, they argued that in order to benefit from orthographic exposure, children have to be able to decode the spellings correctly. They further demonstrated that the degree to which orthographic representations facilitate word learning correlates positively with children’s reading skills. Finally, and of particular importance for the work presented in this thesis, they reported a similar learning advantage when children were only instructed to imagine the words’ spellings during the learning phase, without being given any explicit orthographic information.

This link between orthographic information and word learning was further explored by Nelson, Balass and Perfetti (2005), who found that linking orthography to semantics is actually easier than linking semantic information to novel phonological input, thus supporting the ideas derived from Ehri’s amalgamation theory (Ehri, 1978). Studies reporting a positive contribution of orthographic information to word learning, an effect termed orthographic facilitation, generally agree that orthography serves as an additional (visual) memory trace that facilitates the learning process (Miles, Ehri, & Lauterbach, 2016; Ricketts, Bishop, & Nation, 2009; Rosenthal & Ehri, 2008).

Furthermore, orthographic facilitation has been studied in a variety of populations and was found to be significant not only in typically developing children and adults, but also in children with hearing impairments (Salins, Leigh, Cupples, & Castles, 2021) and children with dyslexia (Baron et al., 2018). Despite their difficulties with phonological decoding, children with dyslexia seem able to benefit from orthographic exposure during word learning, at least when the task requires no verbal response (Baron et al., 2018). Finally, orthographic facilitation effects, measured both as the number of correctly recalled novel words and as retrieval latency, were shown to hold even when learning novel words in a second language (Bürki, Welby, Clément, & Spinelli, 2019).

In sum, the studies reviewed above show positive effects of orthography on learning novel spoken words. However, these studies explicitly used orthography in the learning process, thereby providing participants with an additional memorization cue. The present thesis investigates how orthography affects word learning indirectly, that is, when it is not directly involved in the learning process. Since novel words can be acquired without explicit reference to orthography (i.e., entirely in the auditory domain), it could be the case that, owing to lifelong experience with written language, orthographic effects emerge even when spellings play no part in learning. This hypothesis is tested directly in three experiments conducted in the course of the present thesis.

The current thesis

The main goal of the present thesis is to explore the influence of orthographic knowledge on spoken word learning in skilled adult readers. Unlike in early childhood, when spoken language is the only source of vocabulary acquisition, adults can encounter novel words aurally (in spoken language), through reading (in written language), or through both at the same time. We therefore set out to explore how the two codes (i.e., phonological and orthographic) interact in the course of spoken word learning. As there is now ample evidence that explicitly linking orthographic labels to novel phonological word forms aids memorization (see above), the thesis focuses on the implicit effects of orthography on the learning process (i.e., when orthography is not explicitly present during learning). Specifically, the questions explored in this thesis were mainly motivated by two separate lines of research:

- studies showing pervasive effects of orthography on spoken language processing (Seidenberg & Tanenhaus, 1979; Treiman & Cassar, 1997; Ziegler & Ferrand, 1998)

- studies showing a positive link between children’s oral vocabulary knowledge and their reading skills (Castles & Nation, 2008; McKague, Pratt, & Johnston, 2001; Nation & Snowling, 2004; Ouellette, 2006)

Since the studies showing orthographic effects in speech processing have already been discussed in detail, we now turn to the evidence for a link between oral vocabulary knowledge and reading skills.

The relationship between oral vocabulary and reading

It is well established that children’s phonological skills, and specifically their phonological awareness, correlate positively with and predict future reading success (Rack, Hulme, Snowling, & Wightman, 1994; Wagner & Torgesen, 1987). Researchers studying both typically developing children and children with dyslexia seem to agree that tasks tapping phonological awareness are not only related to better reading skills (Swank & Catts, 1994) but can also distinguish good from poor readers even before the onset of reading acquisition (Turan & Guel, 2008; for a review, see Snowling & Hulme, 2021). Given how well established this link is, it is not surprising that recent years have seen the emergence of studies focusing on less-studied predictors of reading success, one of them being oral vocabulary knowledge (Nation & Snowling, 2004).

Evidence for a link between oral vocabulary knowledge and reading performance comes from studies whose designs vary in their degree of experimental control and, consequently, in how strong a causal conclusion they allow about the relationship between the two (Hulme & Snowling, 2013). For instance, positive correlations between vocabulary knowledge and word reading have been observed in several cross-sectional studies in which participants’ standardized oral vocabulary scores were used to predict reading success (Goff, Pratt, & Ong, 2005; Ouellette, 2006). Interestingly, the relationship between the two was found to be strongest for reading words with irregular spellings (Ouellette & Beers, 2010; Ricketts, Nation, & Bishop, 2007).

Further evidence for a link between oral vocabulary knowledge and reading performance comes from studies in which oral vocabulary is measured at the early stages of reading acquisition, or even before its onset, and used to predict later reading skills. In one such longitudinal study, which followed children from the age of 8.5 to 13 years, Nation and Snowling (2004) demonstrated that, in addition to phonological skills and listening comprehension, oral vocabulary knowledge accounted for unique variance in word reading scores. This contribution of oral vocabulary was found both at the first testing session, at the age of 8.5 years, and four and a half years later.
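The notion of “unique variance” can be made concrete with a hierarchical regression: predictors already known to matter are entered first, and the increase in explained variance (ΔR²) when oral vocabulary is added is the variance it uniquely accounts for. The sketch below computes ΔR² on simulated scores using ordinary least squares from NumPy; the data, variable names, and effect sizes are hypothetical and do not reproduce Nation and Snowling’s (2004) data or analysis.

```python
import numpy as np


def r_squared(X: np.ndarray, y: np.ndarray) -> float:
    """Proportion of variance in y explained by an OLS fit on X (intercept added)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    return float(1.0 - residuals.var() / y.var())


def unique_variance(baseline: np.ndarray, added: np.ndarray, y: np.ndarray) -> float:
    """Delta R^2: extra variance explained when `added` is entered after `baseline`."""
    return r_squared(np.column_stack([baseline, added]), y) - r_squared(baseline, y)


# Simulated, hypothetical scores; the coefficients are arbitrary and not taken from any study.
rng = np.random.default_rng(seed=1)
n = 500
phonology = rng.normal(size=n)
listening = 0.5 * phonology + rng.normal(size=n)
vocabulary = 0.4 * listening + rng.normal(size=n)
reading = 0.5 * phonology + 0.2 * listening + 0.3 * vocabulary + rng.normal(size=n)

baseline = np.column_stack([phonology, listening])
print(f"R^2, phonology + listening comprehension: {r_squared(baseline, reading):.3f}")
print(f"Unique variance of oral vocabulary (delta R^2): "
      f"{unique_variance(baseline, vocabulary, reading):.3f}")
```

Because adding a predictor can never lower R² in an ordinary least-squares fit, ΔR² is always non-negative; whether it is reliably above zero is a question of significance testing, which this sketch leaves out.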

Finally, the most direct evidence for a link between oral vocabulary knowledge and reading success comes from training studies using novel word material. One of the first studies to employ such a design was conducted with fourth-grade English-speaking children, who were faster to read words previously acquired through aural training than untrained words they had never heard before (Hogaboam & Perfetti, 1978). A similar aural training advantage for reading previously acquired spoken words was found by McKague, Pratt, and Johnston (2001), who trained first-grade children on a set of novel spoken words and observed higher accuracy when the children read the trained words. However, whereas the two studies conducted with children showed that familiarity with a word’s pronunciation is enough for the training advantage in reading to occur (i.e., semantic knowledge did not yield an additional benefit), a training study with adult readers found both semantic and phonological training to be important for reading success (Taylor, Plunkett, & Nation, 2011). Because the amount and type of training with novel words were experimentally manipulated in these studies, their findings point to a causal rather than merely associative role of oral vocabulary in later reading success. The mechanism by which oral vocabulary assists word reading, however, remains to be fully understood.

Theoretical explanations of the link between oral vocabulary and reading

Although their main ideas and descriptions of how reading skills are acquired and developed differ considerably, most prominent theories of reading development agree that oral vocabulary assists word reading through a process underlying the acquisition of novel orthographic representations (i.e., orthographic learning; see Castles & Nation, 2008). For instance, according to Share's self-teaching hypothesis (Share, 1995, 1999, 2004, 2008), already existing phonological representations (i.e., pronunciations of familiar words) serve as a reference point against which attempts to read unfamiliar words are compared during grapheme-to-phoneme conversion. Successful decoding attempts lead to a match between the written input and the existing phonological representation in the lexicon, thereby reinforcing orthographic learning. In the same vein, unsuccessful attempts benefit from phonological feedback (coming from the existing phonological representation), which then guides readers to correct their pronunciation. Similarly, Ehri's stage theory of reading development (Ehri, 2005, 2014), which describes the various stages children go through before becoming fully skilled readers, postulates that forming a novel orthographic representation requires orthographic mapping between the spelling and the existing lexical representation, which contains both phonological and semantic information. Oral vocabulary is seen as beneficial because it provides a semantic and phonological reference that guides this mapping, or connecting, process. Finally, according to the lexical quality hypothesis (Perfetti & Hart, 2001), phonological, orthographic, and semantic information interact in forming complete lexical representations of words in the mental lexicon.
This implies that better semantic and phonological representations should have a positive impact on orthographic learning (i.e., the process of generating a new orthographic representation).

Despite important differences in how reading development is conceptualized, either as a sequential process evolving through very specific stages (Ehri, 2005, 2014) or as the acquisition of orthographic representations at the item level (Perfetti & Hart, 2001; Share, 1995), all of these theories share the idea that the mechanism underlying orthographic learning operates at the moment of the first visual encounter with a word's spelling. Namely, they aim to explain how letters (graphemes) are converted into sounds (phonemes) when the written form of an already familiar word is seen for the first time. An alternative explanation of the link between oral vocabulary knowledge and reading, which has received growing attention in recent years, proposes that the mechanism underlying the creation of orthographic representations can in fact start earlier. On this account, a mechanism similar to the one employed when sounding out unfamiliar written words (Share, 1995) operates in reverse, converting sounds (phonemes) into letters (graphemes), and thereby generates preliminary orthographic representations of already familiar spoken words (see Stuart & Coltheart, 1988). As a result, a person's knowledge of phoneme-to-grapheme mappings can initiate orthographic learning even before the first visual exposure to the actual orthographic representations (i.e., the words' spellings).

Some of the first experimental evidence showing that orthographic expectations³ can be generated solely on the basis of a novel phonological word form comes from Johnston, McKague and Pratt (2004; see also McKague, Davis, Pratt, & Johnston, 2008). Across three experiments, the authors demonstrated that English-speaking adults had encoded orthographic representations of novel words prior to the first visual encounter with the words' actual spellings. They first trained their participants on the meanings and pronunciations of novel words without exposing them to the words' spellings. Next, they presented participants with the novel words' spellings in a modified lexical decision task with a masked priming manipulation. Specifically, to tease out any potential effects of orthographic priming during access to novel visual words, they created four prime-target conditions: pairs that overlapped in their phonological form (e.g., <spaith> before <SPATHE>), orthographic form (e.g., <spanth> before <SPATHE>), both (e.g., <spathe> before <SPATHE>) or neither (e.g., <gormin> before <SPATHE>). Since novel words preceded by identical primes (i.e., those overlapping in both phonological and orthographic form) yielded faster processing times than those preceded by primes with phonological overlap only, the authors could argue that the newly created representations of spoken words were not purely phonological. In addition, as the masked priming task relies on automatic lexical access (Forster & Davis, 1984), their finding further demonstrated that representations of novel words are automatically accessed using the same recognition mechanisms employed when accessing already existing orthographic representations (i.e., representations of familiar words). Of particular importance for the authors' conclusions is the finding that novel words preceded by phonological primes and those preceded by purely orthographic primes yielded similar processing times.
The latter were, however, processed significantly faster than novel words preceded by primes spelled with a completely different set of letters (e.g., <gormin> before <SPATHE>). The absence of purely phonologically mediated priming, together with the significant difference between orthographically similar and dissimilar primes, led the authors to conclude that these English-speaking adults had generated orthographic representations of the novel spoken words during the learning phase. Nevertheless, the authors themselves point out that participants could have imagined and generated spellings that differed from those they were later presented with. It therefore remains unclear whether a single expectation was generated for each word or whether multiple expectations for alternative spelling patterns were considered.

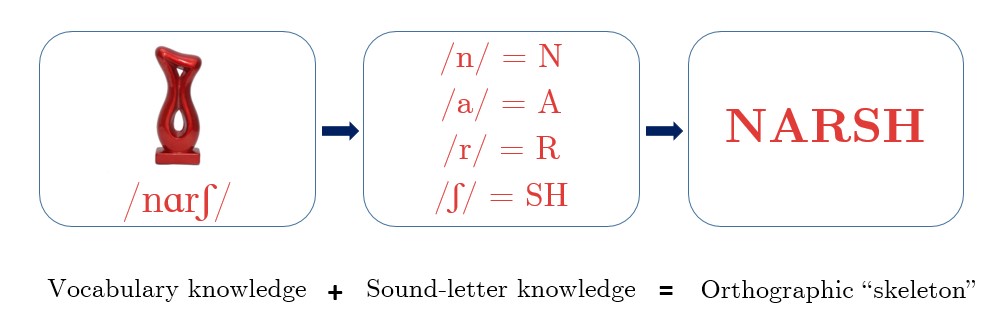

The orthographic skeleton hypothesis

More recent evidence has further demonstrated that preliminary orthographic representations can be generated solely on the basis of phonological word forms. This account is known as the orthographic skeleton hypothesis (see Beyersmann et al., 2021; Wegener et al., 2018, 2020). According to this hypothesis, knowledge of phoneme-to-grapheme mappings can be used to generate preliminary orthographic representations (hereafter, orthographic skeletons) for novel words acquired through aural instruction (see Figure 0.4). Importantly, this happens even before the first visual encounter with the novel words' spellings.

Figure 0.4: Representation of the orthographic skeleton hypothesis. Having a word in one's oral vocabulary and knowing how sounds map onto letters leads to the creation of a preliminary orthographic representation (i.e., the orthographic skeleton). For illustrative purposes, the American pronunciation of the example word is used.

The idea that oral vocabulary knowledge can assist word reading through the generation of orthographic skeletons was recently tested by Wegener and colleagues (Wegener et al., 2018). In their study, a group of English-speaking fourth-grade children were first trained on the meanings (semantic training) and pronunciations (phonological training) of novel English-like words (e.g., /nesh/, /kɔɪb/). During the learning phase, which took place over two experimental sessions on separate days, children were never exposed to orthographic input. Once training was completed, children were presented with the novel words' spellings in a sentence reading task while their eye movements were recorded. Importantly, words were presented either in predictable spellings (e.g., /nesh/ spelled as <nesh>) or unpredictable spellings (e.g., /kɔɪb/ spelled as <koyb>, where <coib> would be more predictable). Along with the words children had previously been trained on (trained words), half of the sentences contained untrained words, again with predictable or unpredictable spellings. As the authors expected, and in line with previous research reporting a reading advantage for familiar spoken words (see above), there was a significant training effect: overall, trained words were read faster than untrained words. This advantage was observed on all four eye-tracking measures, with trained words yielding shorter total reading times, shorter gaze durations, shorter first fixation durations, and fewer regressions-in. Moreover, there was an overall facilitation for words shown in predictable spellings (e.g., <nesh>) compared to those with unpredictable spellings (e.g., <koyb>). Finally, and crucially for the conclusions of the study, there was a significant interaction between training and spelling, again observed on all of these measures. The interaction indicated that the facilitation for previously acquired words was present only when the words were shown in their predictable spellings.
Consequently, the authors interpreted this interaction between spelling predictability and training as evidence that children had generated orthographic expectations for all previously acquired words. Since the expectations children had generated for predictable trained words were in line with their real spellings, reading was facilitated. By contrast, the absence of facilitation for trained words with unpredictable spellings indicated a mismatch between orthographic skeletons children had generated for those words and the actual spellings they were later presented with.
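The logic of this prediction can be made concrete with a small sketch. The Python code below is purely illustrative and is not the procedure used by Wegener et al. (2018): the phoneme labels and the grapheme weights in the table `P2G` are hypothetical values chosen only so that <coib> comes out as more predictable than <koyb>, and the helper names (`candidate_spellings`, `orthographic_skeleton`, `matches_skeleton`) are invented for this example. The sketch also assumes that a single, most probable spelling serves as the skeleton, which is precisely one of the open questions raised above.

```python
from itertools import product
from math import prod

# Hypothetical toy phoneme-to-grapheme table: each phoneme maps to candidate
# graphemes with illustrative weights (NOT estimates from any corpus or study).
P2G = {
    "n": {"n": 1.0},
    "ɛ": {"e": 0.9, "ea": 0.1},
    "ʃ": {"sh": 1.0},
    "k": {"c": 0.6, "k": 0.4},
    "ɔɪ": {"oi": 0.7, "oy": 0.3},
    "b": {"b": 1.0},
}


def candidate_spellings(phonemes, p2g=P2G):
    """Enumerate every spelling licensed by the table, most probable first."""
    options = [p2g[ph].items() for ph in phonemes]
    candidates = []
    for combo in product(*options):
        spelling = "".join(grapheme for grapheme, _ in combo)
        score = prod(weight for _, weight in combo)  # assumes independent choices
        candidates.append((spelling, score))
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)


def orthographic_skeleton(phonemes, p2g=P2G):
    """Assumption: the skeleton is the single most probable spelling."""
    return candidate_spellings(phonemes, p2g)[0][0]


def matches_skeleton(phonemes, shown_spelling, p2g=P2G):
    """True if the spelling shown at test agrees with the generated skeleton."""
    return orthographic_skeleton(phonemes, p2g) == shown_spelling


nesh = ["n", "ɛ", "ʃ"]
koyb = ["k", "ɔɪ", "b"]
print(orthographic_skeleton(nesh))                 # nesh
print(matches_skeleton(koyb, "koyb"))              # False: skeleton is "coib"
print([s for s, _ in candidate_spellings(koyb)])   # ['coib', 'koib', 'coyb', 'koyb']
```

In this toy version, a trained word shown in a predictable spelling matches the skeleton, whereas <koyb> does not, mirroring the pattern of facilitation described above. Words whose sounds license several plausible spellings simply produce longer candidate lists, which is one way to picture why spelling predictability might matter.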

These results were later replicated, using exactly the same procedure and materials, and extended by showing that the effect of orthographic expectations persists even on the second encounter with the written forms of novel words (Wegener et al., 2020). After the third encounter, however, the facilitation effects disappear as children start updating their orthographic expectations based on their previous exposure to the actual spellings. The authors of these two studies take these findings as evidence that orthographic learning, that is, the process of acquiring novel orthographic representations, can start even before the first encounter with written word forms, through the creation of orthographic expectations (i.e., orthographic skeletons). Finally, using the same materials and word learning paradigm, Beyersmann et al. (2021) showed that orthographic skeleton effects hold even when reading morphologically complex words containing stems that participants had acquired via aural instruction. This study further showed that adult readers, like the children tested in the earlier studies, can also generate orthographic skeletons.

Along with Johnston et al. (2004), the authors of the aforementioned studies provide persuasive evidence for the orthographic skeleton account. They demonstrate that preliminary orthographic representations can be generated solely based on the words’ phonological properties. The present thesis will further explore the process by which preliminary orthographic representations are generated in the course of spoken word learning by asking the following three questions:

- Are orthographic skeletons generated for all novel words acquired through oral instruction, or only for words with highly predictable spellings? This question will be tested experimentally in Chapter 1 by manipulating the number of possible spellings of the novel words.

- Does the generation of orthographic skeletons depend on the complexity of phoneme-to-grapheme mappings (i.e., the opacity of the language)? This question will be tested experimentally in Chapter 2 by comparing speakers of a language with simple, almost one-to-one mappings between sounds and letters (i.e., a transparent language such as Spanish) to speakers of a language in which this relationship is more complex, since multiple sounds are associated with multiple letters and vice versa (i.e., an opaque language such as French).

- What is the nature of orthographic effects in spoken word learning? Specifically, are orthographic skeletons generated automatically during spoken word learning, or does generating them represent a conscious process that participants strategically engage in to facilitate word learning? This question will be tested experimentally in Chapter 3 by comparing implicit and explicit spoken word learning.

References

Note that orthography is a broader term than spelling. Namely, while spelling comprises phoneme-to-grapheme conversion rules, orthography refers to spelling as well as to the capitalization, punctuation, and word division conventions of a given language. Given that the distinction between the two is usually not (explicitly) made in psycholinguistic research, for the sake of consistency, spelling and orthography will be used synonymously in the present thesis.↩︎

Phonological and orthographic representations refer both to smaller units, such as phonemes and graphemes, and to larger units, such as words. Accordingly, an inconsistent sound is one that maps onto multiple graphemes (e.g., the sound /k/ maps onto <c>, <qu>, and <k>), and an inconsistent grapheme is one that represents multiple sounds (e.g., the grapheme <c> represents both /k/ and /s/). By contrast, consistent sounds and graphemes have one-to-one correspondences.↩︎

The term orthographic expectations is used to denote the orthographic representation of a word that has been constructed before the first visual encounter with its written form, that is, solely based on phonological properties of the word.↩︎